Can AI help increase expression of empathy?

These days, someone seeking mental health support can find a variety of online communities to talk through what they’re feeling.

In these communities, the people responding are usually peers, and empathy is key.

But empathy is a skill that can be tough to learn, and finding the right words in the moment isn’t always easy.

Enter artificial intelligence, or AI.

A team led by University of Washington researchers studied whether AI can help peer supporters interacting on text-based online platforms respond with more empathy.

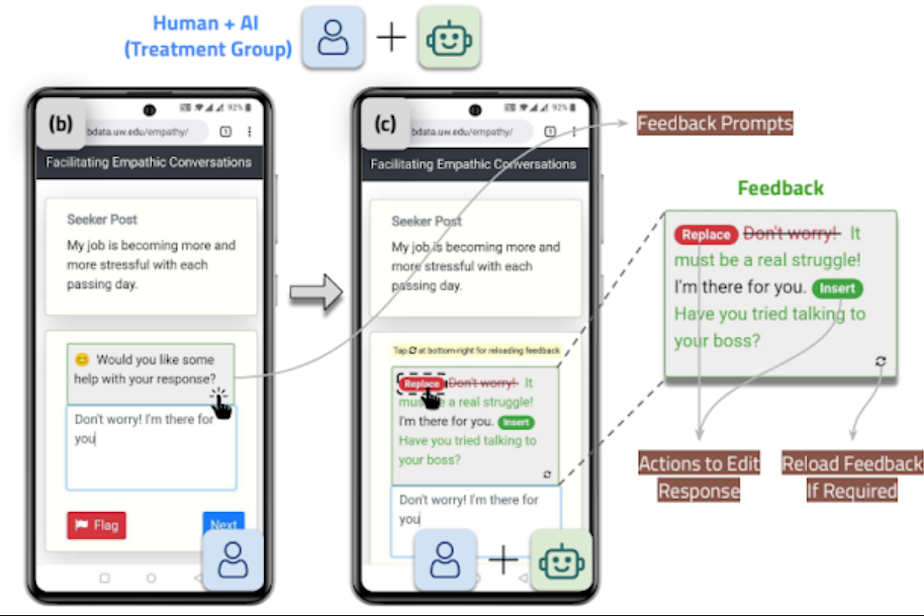

They developed an AI system to give peer supporters real-time feedback, like an editor looking over your shoulder while you type a message.

"It looks pretty similar to how, in like a word processing-type software, how you would get feedback on grammar or spelling. But it was very specific to just help people express empathy more effectively," said UW computer scientist Tim Althoff, who helped lead the study.

The study showed that access to AI feedback resulted in a 19.6% increase in conversational empathy between peers, with even greater gains among participants who said they usually have difficulty providing support.


Althoff said some study results also suggested that tools like this could help train peer supporters to feel more confident in responding to people who are seeking help.

“Peer supporters, after the study, reported that they now felt more confident to support others in crisis, which was a deeply meaningful outcome to us. It suggests this increased self-efficacy,” he said.

Althoff said online peer support networks help address limited access to mental health support, which can stem from a variety of causes, including lack of insurance, stigma, and a shortage of trained professionals in the community.

Most of the time, people interacting on these platforms aren’t trained professionals, and Althoff said there’s room for interactions to be even more effective.

He said earlier work showed that there are often missed opportunities for empathy in peer-to-peer interactions on sites like TalkLife.


“While fully replacing humans with AI for empathic care has previously drawn scepticism [sic] from psychotherapists, our results suggest that it is feasible to empower untrained peer supporters with appropriate AI-assisted technologies in relatively lower-risk settings, such as peer-to-peer support,” the study states.

As AI becomes more prevalent in fields like mental health, all kinds of ethical quandaries arise.

One of the biggest is how large a role AI should play.

“What is showing the best results is programs that involve the AI-human collaboration. It’s not just AI alone,” said Maria Espinola, a licensed clinical psychologist and CEO of the Institute for Health Equity and Innovation.

Espinola said it’s also critical that the development of such tools involve mental health experts, as well as people from diverse backgrounds, to ensure culturally appropriate support is available for everyone.


Althoff said he and his colleagues worked with clinical psychologists at multiple institutions and prioritized participants’ safety and welfare in the study design.

They also intentionally focused on human-AI collaboration, with the AI offering suggestions rather than replacing real people with messages written entirely by a machine.

Messages written during the study were not sent to real people seeking help.

Correction: An earlier version of this story misstated Maria Espinola's job title.